SharePoint Online: Find All Files Exceeding the Maximum URL Length Limitation using PowerShell

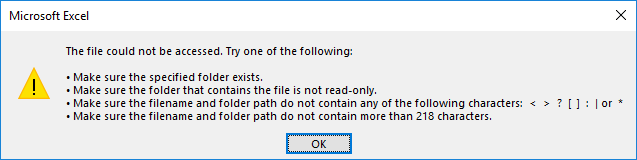

Microsoft Office Excel files stored in deep Folders, sub-folder structures with long names often result in an error when they exceed the maximum file URL length of 218 characters, as in my other article SharePoint Online: URL Length Limitation of 218 Characters in Excel Files. So, we wanted to proactively scan the entire SharePoint Online environment for Excel files with long URLs.

PowerShell to Get All Excel Files that Exceed the Maximum Character Limit URL

This PowerShell script scans Excel files in all document libraries in a given site collection, checks if any file URL length exceeds the given limit, and exports the output report to a CSV format.

Import-Module Microsoft.Online.SharePoint.PowerShell -DisableNameChecking

#Function to scan all files with long file names in a site

Function Scan-SPOLongFilePath($SiteURL)

{

Try {

#Setup the context

$Ctx = New-Object Microsoft.SharePoint.Client.ClientContext($SiteURL)

$Ctx.Credentials = New-Object Microsoft.SharePoint.Client.SharePointOnlineCredentials($Cred.UserName,$Cred.Password)

#Get the web from given URL and its subsites

$Web = $Ctx.web

$Ctx.Load($Web)

$Ctx.Load($Web.Lists)

$Ctx.Load($web.Webs)

$Ctx.executeQuery()

#Arry to Skip System Lists and Libraries

$SystemLists = @("Converted Forms", "Master Page Gallery", "Customized Reports", "Form Templates", "List Template Gallery", "Theme Gallery",

"Reporting Templates", "Solution Gallery", "Style Library", "Web Part Gallery","Site Assets", "wfpub", "Site Pages", "Images")

Write-host -f Yellow "Processing Site: $SiteURL"

#Prepare the CAML query

$Query = New-Object Microsoft.SharePoint.Client.CamlQuery

$Query.ViewXml = "@

<View Scope='RecursiveAll'>

<Query>

<OrderBy><FieldRef Name='ID' Ascending='TRUE'/></OrderBy>

</Query>

<RowLimit Paged='TRUE'>2000</RowLimit>

</View>"

#Filter Document Libraries to Scan

$Lists = $Web.Lists | Where {$_.BaseType -eq "DocumentLibrary" -and $_.Hidden -eq $false -and $SystemLists -notcontains $_.Title}

#Loop through each document library

Foreach ($List in $Lists)

{

$Counter=1

#Batch Process List items

Do {

$ListItems = $List.GetItems($Query)

$Ctx.Load($ListItems)

$Ctx.ExecuteQuery()

$Query.ListItemCollectionPosition = $ListItems.ListItemCollectionPosition

If($ListItems.count -gt 0)

{

Write-host -f Cyan "`t Processing Document Library: '$($List.Title)', Auditing $($ListItems.Count) Item(s)"

$DocumentInventory = @()

#Iterate through each file and get data

Foreach($Item in $ListItems | Where {$_.FileSystemObjectType -eq "File"})

{

#Display a Progress bar

Write-Progress -Activity "Scanning Files in the Library" -Status "Testing if the file has long URL '$($Item.FieldValues.FileRef)' ($Counter of $($List.ItemCount))" -PercentComplete (($Counter / $List.ItemCount) * 100)

$File = $Item.File

$Ctx.Load($File)

$Ctx.ExecuteQuery()

#calculate the Absolute encoded URL of the File

If($Web.ServerRelativeUrl -eq "/")

{

$AbsoluteURL= $("{0}{1}" -f $Web.Url, $ListItem.FieldValues["FileRef"])

}

else

{

$AbsoluteURL= $("{0}{1}" -f $Web.Url.Replace($Web.ServerRelativeUrl,''), $Item.FieldValues["FileRef"])

}

$AbsoluteURL = [uri]::EscapeUriString($AbsoluteURL)

If($AbsoluteURL.length -gt $MaxUrlLength)

{

Write-host "`t`tFound a Long File URL at '$AbsoluteURL'" -f Green

#Collect document data

$DocumentData = New-Object PSObject

$DocumentData | Add-Member NoteProperty SiteURL($SiteURL)

$DocumentData | Add-Member NoteProperty DocLibraryName($List.Title)

$DocumentData | Add-Member NoteProperty FileName($File.Name)

$DocumentData | Add-Member NoteProperty FileURL($AbsoluteURL)

$DocumentData | Add-Member NoteProperty CreatedBy($Item["Author"].Email)

$DocumentData | Add-Member NoteProperty CreatedOn($File.TimeCreated)

$DocumentData | Add-Member NoteProperty ModifiedBy($Item["Editor"].Email)

$DocumentData | Add-Member NoteProperty LastModifiedOn($File.TimeLastModified)

$DocumentData | Add-Member NoteProperty Size-KB([math]::Round($File.Length/1KB))

#Add the result to an Array

$DocumentInventory += $DocumentData

}

$Counter++

}

}

}While($Query.ListItemCollectionPosition -ne $Null)

#Export the result to CSV file

$DocumentInventory | Export-CSV $ReportOutput -NoTypeInformation -Append

}

#Iterate through all subsites of the current site

ForEach ($Subweb in $Web.Webs)

{

#Call the function recursively

Scan-SPOLongFilePath($Subweb.url)

}

}

Catch {

write-host -f Red "Error Scaning Document Library Inventory!" $_.Exception.Message

}

}

#Set Parameters

$SiteURL= "https://crescent.sharepoint.com/sites/Marketing"

$ReportOutput="C:\temp\LongFileNames.csv"

$FileExtension = "xlsx"

$MaxUrlLength = 259

#Get Credentials to connect

$Cred = Get-Credential

#Delete the Output Report if exists

If (Test-Path $ReportOutput) { Remove-Item $ReportOutput }

#Call the function

Scan-SPOLongFilePath $SiteURL

For lists and libraries with < 5000 items, this script works fine. Let’s re-code in PnP PowerShell to handle bigger document libraries.

PnP PowerShell to Audit SharePoint Online Site Collection for Long URLs

This PnP PowerShell script scans the given site collection for long files and folders and exports its findings to a CSV file.

#Parameters

$SiteURL = "https://crescent.SharePoint.com/sites/Marketing"

$MaxUrlLength = 218

$CSVPath = "C:\Temp\LongURLInventory.csv"

$global:LongURLInventory = @()

$Pagesize = 2000

#Function to scan and collect long files

Function Get-PnPLongURLInventory

{

[cmdletbinding()]

param([parameter(Mandatory = $true, ValueFromPipeline = $true)] $Web)

Write-host "Scanning Files with Long URL in Site '$($Web.URL)'" -f Yellow

If($Web.ServerRelativeUrl -eq "/")

{

$TenantURL= $Web.Url

}

Else

{

$TenantURL= $Web.Url.Replace($Web.ServerRelativeUrl,'')

}

#Get All Large Lists from the Web - Exclude Hidden and certain lists

$ExcludedLists = @("Form Templates", "Preservation Hold Library","Site Assets", "Pages", "Site Pages", "Images",

"Site Collection Documents", "Site Collection Images","Style Library")

#Get All Document Libraries from the Web

$Lists= Get-PnPProperty -ClientObject $Web -Property Lists

$Lists | Where-Object {$_.BaseType -eq "DocumentLibrary" -and $_.Hidden -eq $false -and $_.Title -notin $ExcludedLists -and $_.ItemCount -gt 0} -PipelineVariable List | ForEach-Object {

#Get Items from List

$global:counter = 0;

$ListItems = Get-PnPListItem -List $_ -PageSize $Pagesize -Fields Author, Created, File_x0020_Type -ScriptBlock { Param($items) $global:counter += $items.Count; Write-Progress -PercentComplete ($global:Counter / ($_.ItemCount) * 100) -Activity "Getting List Items of '$($_.Title)'" -Status "Processing Items $global:Counter to $($_.ItemCount)";}

$LongListItems = $ListItems | Where { ([uri]::EscapeUriString($_.FieldValues.FileRef).Length + $TenantURL.Length ) -gt $MaxUrlLength }

Write-Progress -Activity "Completed Retrieving Items from List $($List.Title)" -Completed

If($LongListItems.count -gt 0)

{

#Get Root folder of the List

$Folder = Get-PnPProperty -ClientObject $_ -Property RootFolder

Write-host "`tFound '$($LongListItems.count)' Items with Long URLs at '$($Folder.ServerRelativeURL)'" -f Green

#Iterate through each long url item and collect data

ForEach($ListItem in $LongListItems)

{

#Calculate Encoded Full URL of the File

$AbsoluteURL = "$TenantURL$($ListItem.FieldValues.FileRef)"

$EncodedURL = [uri]::EscapeUriString($AbsoluteURL)

#Collect document data

$global:LongURLInventory += New-Object PSObject -Property ([ordered]@{

SiteName = $Web.Title

SiteURL = $Web.URL

LibraryName = $List.Title

LibraryURL = $Folder.ServerRelativeURL

ItemName = $ListItem.FieldValues.FileLeafRef

Type = $ListItem.FileSystemObjectType

FileType = $ListItem.FieldValues.File_x0020_Type

AbsoluteURL = $AbsoluteURL

EncodedURL = $EncodedURL

UrlLength = $EncodedURL.Length

CreatedBy = $ListItem.FieldValues.Author.LookupValue

CreatedByEmail = $ListItem.FieldValues.Author.Email

CreatedAt = $ListItem.FieldValues.Created

ModifiedBy = $ListItem.FieldValues.Editor.LookupValue

ModifiedByEmail = $ListItem.FieldValues.Editor.Email

ModifiedAt = $ListItem.FieldValues.Modified

})

}

}

}

}

#Connect to Site collection

Connect-PnPOnline -Url $SiteURL -Interactive

#Call the Function for all Webs

Get-PnPSubWeb -Recurse -IncludeRootWeb | ForEach-Object { Get-PnPLongURLInventory $_ }

#Export Documents Inventory to CSV

$Global:LongURLInventory | Export-Csv $CSVPath -NoTypeInformation

Write-host "Report has been Exported to '$CSVPath'" -f Magenta

How about getting this inventory for the Entire SharePoint Online Tenant?

This time, let’s scan all files across all site collections in the entire tenant for all files with long file URLs.

#Parameters

$Domain = "crescent" #Domain Name in SharePoint Online. E.g. https://Crescent.SharePoint.com

$MaxUrlLength = 218

$CSVFile = "C:\Temp\LongURLInventory.csv"

$Pagesize = 2000

#Frame Tenant URL and Tenant Admin URL

$TenantURL = "https://$Domain.SharePoint.com"

$TenantAdminURL = "https://$Domain-Admin.SharePoint.com"

#Function to scan and collect long files

Function Get-PnPLongURLInventory

{

[cmdletbinding()]

param([parameter(Mandatory = $true, ValueFromPipeline = $true)] $Web)

Write-host "Scanning Files with Long URL in Site '$($Web.URL)'" -f Yellow

#Get All Large Lists from the Web - Exclude Hidden and certain lists

$ExcludedLists = @("Form Templates", "Preservation Hold Library","Site Assets", "Pages", "Site Pages", "Images",

"Site Collection Documents", "Site Collection Images","Style Library")

#Get All Document Libraries from the Web

$Lists= Get-PnPProperty -ClientObject $Web -Property Lists

$Lists | Where-Object {$_.BaseType -eq "DocumentLibrary" -and $_.Hidden -eq $false -and $_.Title -notin $ExcludedLists -and $_.ItemCount -gt 0} -PipelineVariable List | ForEach-Object {

#Get Items from List

$global:counter = 0;

$LongURLInventory = @()

$ListItems = Get-PnPListItem -List $_ -PageSize $Pagesize -Fields Author, Created, File_x0020_Type -ScriptBlock { Param($items) $global:counter += $items.Count; Write-Progress -PercentComplete ($global:Counter / ($_.ItemCount) * 100) -Activity "Getting List Items of '$($_.Title)'" -Status "Processing Items $global:Counter to $($_.ItemCount)";}

$LongListItems = $ListItems | Where { ([uri]::EscapeUriString($_.FieldValues.FileRef).Length + $TenantURL.Length ) -gt $MaxUrlLength }

If($LongListItems.count -gt 0)

{

#Get Root folder of the List

$Folder = Get-PnPProperty -ClientObject $_ -Property RootFolder

Write-host "`tFound '$($LongListItems.count)' Item(s) with Long URLs at '$($Folder.ServerRelativeURL)'" -f Green

#Iterate through each long url item and collect data

ForEach($ListItem in $LongListItems)

{

#Calculate Encoded Full URL of the File

$AbsoluteURL = "$TenantURL$($ListItem.FieldValues.FileRef)"

$EncodedURL = [uri]::EscapeUriString($AbsoluteURL)

#Collect document data

$LongURLInventory += New-Object PSObject -Property ([ordered]@{

SiteName = $Web.Title

SiteURL = $Web.URL

LibraryName = $List.Title

LibraryURL = $Folder.ServerRelativeURL

ItemName = $ListItem.FieldValues.FileLeafRef

Type = $ListItem.FileSystemObjectType

FileType = $ListItem.FieldValues.File_x0020_Type

AbsoluteURL = $AbsoluteURL

EncodedURL = $EncodedURL

UrlLength = $EncodedURL.Length

CreatedBy = $ListItem.FieldValues.Author.LookupValue

CreatedByEmail = $ListItem.FieldValues.Author.Email

CreatedAt = $ListItem.FieldValues.Created

ModifiedBy = $ListItem.FieldValues.Editor.LookupValue

ModifiedByEmail = $ListItem.FieldValues.Editor.Email

ModifiedAt = $ListItem.FieldValues.Modified

})

}

#Export Documents Inventory to CSV

$LongURLInventory | Export-Csv $CSVFile -NoTypeInformation -Append

}

Write-Progress -Activity "Completed Exporting Long URL Items from List $($_.Title)" -Completed

}

}

#Connect to Admin Center

Connect-PnPOnline -Url $TenantAdminURL -Interactive

#Delete the Output report file if exists

If (Test-Path $CSVFile) { Remove-Item $CSVFile }

#Get All Site collections - Filter BOT and MySite Host

$Sites = Get-PnPTenantSite -Filter "Url -like '$TenantURL'"

#Iterate through all site collections

$Sites | ForEach-Object {

#Connect to each site collection

Connect-PnPOnline -Url $_.URL -Interactive

#Call the Function for Webs

Get-PnPSubWeb -Recurse -IncludeRootWeb | ForEach-Object { Get-PnPLongURLInventory $_ }

}

Please note, Although the Microsoft documentation says it’s 218 characters, I found up to 259 works fine!

Hi Saludeen, love your work.

Just one note on this script, I am trying to use it to find filepath lengths that are near the 400 character limit, but the thing I noticed is this script produces the encoded “%20″ instead of decoded ” ” but reading the documentation I believe the 400 character limit is based on the decoded length of the path.

It seems like a note on this and a suggested modification to use decoded version could be useful for lots of people. Just an idea I had, but as I said love and appreciate your work!

I take it all back. I hadn’t looked closely enough at the output sheet and didn’t notice the decoded URL column. It’s perfect. I feel a fool for ever doubting the King. This script is perfect. I love it.

Hey mate,

Thanks for those scripts, do you have scripts for finding long path but on the on-prem file share server ?

I have a large data contend in a Library, but I need filter only in a folder recursivelly in a specific library, How I can to do that?

I try filter with -FolderServerRelativeUrl but isn’t works

Can you tell me other way to search on for example https://site.sharepoint.com/sites/test/FolderX

Recurselly

Thanks a lot.

The “-FolderServerRelativeUrl” doesn’t work with Larger lists and Libraries! Use:

#Get all files from folder Get-PnPListItem -List $ListName -PageSize 2000 | Where {$_.FieldValues.FileRef -like $FolderServerRelativePath -and $_.FileSystemObjectType -eq "File" } | ForEach-Object { Write-host $_.FieldValues.FileRef }Help! Error Scaning Document Library Inventory! Exception calling “ExecuteQuery” with “0” argument(s): “The attempted operation is prohibited because it exceeds the list view threshold.”

Post has been updated with the Script to handle larger libraries!

Perhaps I have the site name incorrect, but when I run this against my sharepoint, it asks for credentials, I type them in, then it says Processing Site: etc etc, then it goes directly to PS C:\Windows\system32 and no output file is generated?

The data should be exported to the file specified in $CSVFile variable. So, set this variable with the path to your CSV.

is this script gets the word files details as well ??

Yes! Script scans for all file types and gets files with long URLs.

hi its observed then when i upload files and folders with deep urls they fail in Powershell… I understand the reason, but is there a way out that you know of?